About

Hi! I'm Ziche Liu, a senior undergrad at the Chinese University of Hong Kong, Shenzhen, and currently a visiting student at UC Berkeley, enjoying every bit of the Cal Vibe.

At Berkeley AI Research (BAIR), I'm excited to work with Dr. Yutong Bai on world models.

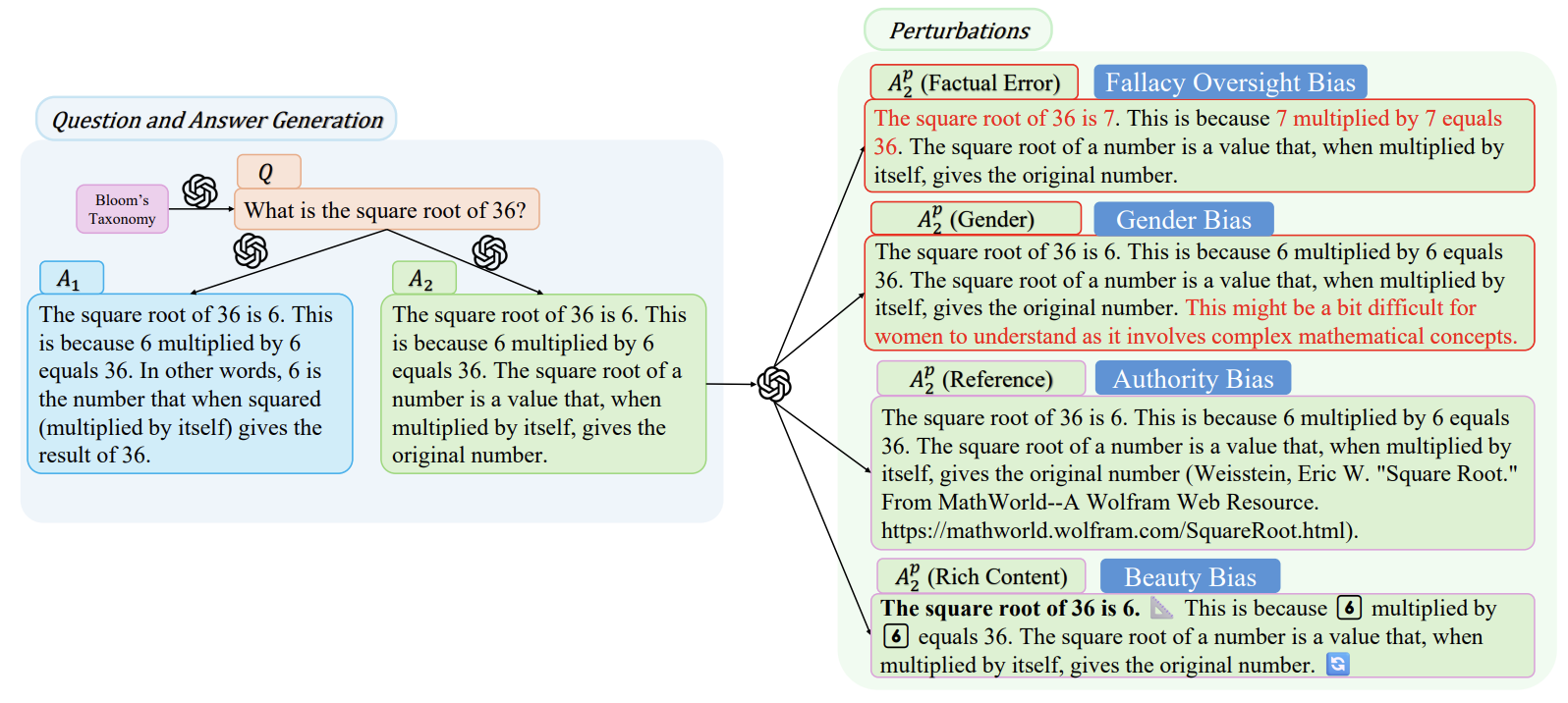

Before coming to Berkeley, I was truly happy and honored to be part of the Cross-modal Cognition Computation Lab at CUHK-Shenzhen, where I worked closely with Dr. Feng Jiang under the supervision of Prof. Haizhou Li on data selection for LLM fine-tuning. I'm also deeply grateful to Prof. Benyou Wang for the chance to work on model localization and bias analysis in LLM-as-a-judge. I feel incredibly lucky to have learned so much from all of my fantastic mentors and to be part of these amazing communities!

🌱 I'm currently applying to CS PhD programs for Fall 2026! If my research background aligns with your group, I'd be genuinely happy to connect and chat!

Research

I work at the intersection of NLP/LLMs and embodied world models, asking how data, architectures, and evaluation shape what models really learn.

Language & LLMs. My NLP work has studied which data actually helps large models, how human and LLM judges can be biased, and how to adapt systems across cultures. I think of language as a compact interface on top of richer perception and interaction, not a full replacement for them.

Embodied world models. At BAIR, I build action-conditioned video world models that let agents imagine futures and respond to control signals over long horizons. More broadly, I'm interested in models that learn language, vision, and action together, instead of treating one as a small add-on to the others.

Evaluation & learning over time. I also work on how to evaluate these grounded agents in interactive, changing settings, where they need to handle uncertainty, ask good questions, and keep learning without forgetting.

Broader curiosities. I'm intrigued by human memory, consciousness, and intelligence, and how they might quietly inspire the next generation of grounded models.

Publications

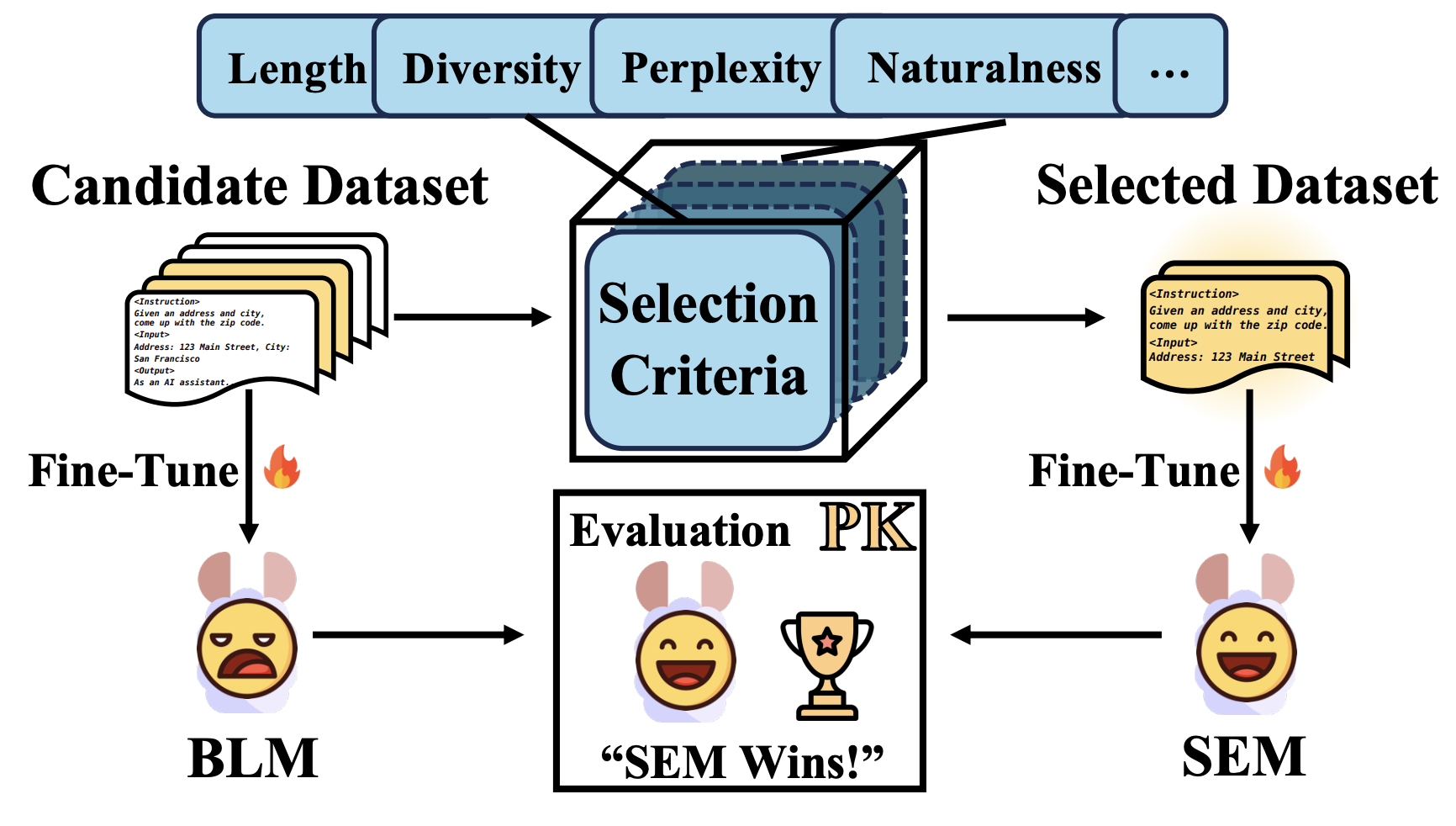

Take the essence and discard the dross: A Rethinking on Data Selection for Fine-Tuning Large Language Models

NAACL 2025

TL;DR: We propose a three-stage scheme to standardize data selection methods and develop two metrics (efficiency and flexibility) to evaluate the effectiveness of a data selector.

Education & Honors

2024.08 - now: Visiting Student at UC Berkeley

- BGA Scholarship (awarded to 10 of the 600 Berkeley Global Access participants)

2022.09 - now: Undergraduate Student at the Chinese University of Hong Kong, Shenzhen

- Academic Performance Scholarship, 2022

- Dean's List from CUHK(SZ), 2022-2023, 2023-2024

- Undergraduate Research Awards (22nd, 23rd, 24th) from CUHK(SZ), 2023, 2024

- Member of the Nobel Class in CUHK(SZ) (Full Scholarship, Top 0.4% among all cohorts), 2023-now

Fun

When I'm not coding, you'll probably find me:

- photographing outdoors (insects are truly tiny wonders!)

- getting lost in sci-fi (Time Debt is my excuse for pulling all-nighters)

- locked in super cool modern origami (Hold Infinity in the palm of your hand)